I Am Afraid

For the last 5 months or so, I’ve been working on a new networked social VR application called I Am Afraid (IAA). This application brings together ideas that have been on my mind for over a year, perhaps longer: ideas around voice, poetry, sculpture, and performance. Many people have asked where the idea for IAA came from, and I am surprised that I can’t remember the moment, or a moment, when I decided I wanted to see words and play with sounds in VR. I do know there have been lots of inspirations along the way, including the work I did with Greg Judelman in flowergarden, voice work with my friend Matthew Spears, clowning, theatre, friendly poets (Andrew Klobucar, Glen Lowry), sound artists (Simon Overstall, Julie Andreyev, prOphecy Sun), etc.

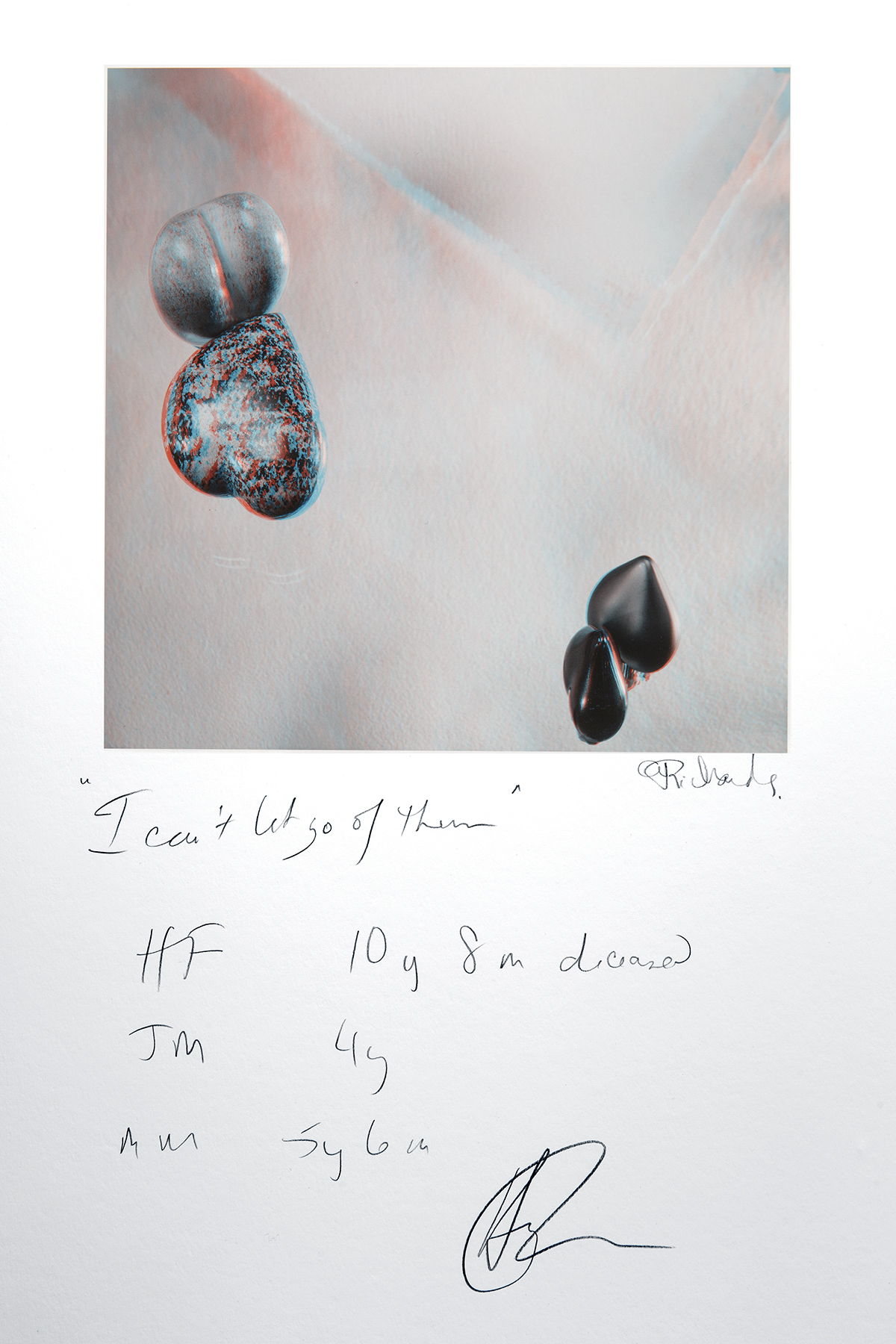

The basic idea is to build sound compositions and sculptures using textual and abstract objects that have embedded recorded sounds. When you are in the environment, you can speak words and have them appear as textual objects, and utter sounds that appear as abstract objects. Both kinds of objects contain the sound of your voice and can be replayed in a variety of ways. Intersecting with an object plays back its sound. The textual objects can be played in parts and at any speed/direction, using granular synthesis. The abstract sounds can be looped. Paths can be recorded through the objects and looped. In this way, layered soundscapes can be created. The objects can also be interacted with in other ways, such as shaking and moving, which alter the sound quality. Other actions are also planned, fleshing out a longstanding idea around a sonification engine based on the physicality of interaction with words.
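For readers unfamiliar with granular synthesis, here is a minimal Python sketch of the general technique, not the code IAA actually uses. It cuts a recording into short overlapping grains, fades each one with a Hann window, and lays them down in the output while a read position moves through the source at a chosen speed. The names `granular_playback`, `grain_size`, `hop`, and `speed` are my own assumptions for illustration. A negative `speed` scans the recording backwards, although in this simple version the audio inside each grain still plays forward.

```python
import math


def hann(n):
    """Hann window of length n; it fades each grain in and out to avoid clicks."""
    if n == 1:
        return [1.0]
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]


def granular_playback(samples, grain_size=256, hop=128, speed=1.0):
    """Overlap-add windowed grains read from `samples`.

    The read position advances by `speed * hop` samples for every `hop`
    samples written, so speed=0.5 stretches the sound to twice its length,
    speed=2.0 halves it, and a negative speed scans from the end backwards.
    All parameter names here are hypothetical, chosen for this sketch.
    """
    if len(samples) < grain_size:
        raise ValueError("recording is shorter than one grain")

    # speed == 0 freezes on one spot; keep the original length in that case.
    out_len = len(samples) if speed == 0 else int(len(samples) / abs(speed))
    window = hann(grain_size)
    out = [0.0] * (out_len + grain_size)

    max_start = len(samples) - grain_size
    # Forward playback starts at the beginning, reverse playback at the end.
    read_pos = 0.0 if speed >= 0 else float(max_start)

    for write_pos in range(0, out_len, hop):
        # Clamp so a grain never reads outside the recording.
        start = int(min(max(read_pos, 0.0), max_start))
        for i in range(grain_size):
            out[write_pos + i] += samples[start + i] * window[i]
        read_pos += speed * hop

    return out[:out_len]


# Usage: a short synthetic tone stands in for a recorded word.
tone = [math.sin(2 * math.pi * 220 * t / 8000) for t in range(2048)]
slow_forward = granular_playback(tone, speed=0.5)
fast_reverse = granular_playback(tone, speed=-2.0)
```

Because the grains overlap by half their length, the windows sum to roughly a constant level, which keeps the loudness steady. Since speed only changes how quickly the read position moves, the pitch of the voice stays about the same while its duration changes.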

I am often asked why the application is called I Am Afraid. As I was starting work on the application in January, I could sense an escalation of fear in the world, in my surroundings. I have been exploring fear for the last 17 years through different paths including meditation and art. One of the features of fear is that when we feel it, when it grips us, we start talking to ourselves. This is a bit of a trap because we get more and more removed from what is actually going on. One of the goals of IAA is to externalize the discursiveness and be playful with the words and sounds. It can be a way to lighten up, see things more clearly, and shift the internal dialogue. And it’s fun.

I used the application during my TEDxECUAD talk last March, which is about fear and technology. I’ve also used it in a performance at AR in Action, a conference at NYU at the beginning of June. It’s a great environment for performance (solo or group), exploration, and composition. I’ll be working on it for some time to come, adding features and (hopefully soon) porting it to Augmented Reality.